People will be upset - NVIDIA RTX 4090 Benchmarks

- A2K

- Nov 24, 2022

Updated: Aug 29, 2023

The GPU season is back on, with Nvidia releasing its top-of-the-line RTX 4090 for us to fight about. It costs 1,599 US dollars and is hella big, so let’s see if it's any good for that much money.

I’m sure everyone is curious to know what is actually new under the hood. The card has far more CUDA cores, totalling over 16 thousand. It has new streaming multiprocessors that NVIDIA claims deliver up to 2x the performance and power efficiency, plus next-gen Tensor and RT cores and considerably more of them.

The next big thing that Jensen focused on while on stage is DLSS 3, which generates entire new frames, whereas DLSS 2 upscales frames rendered at a lower resolution. The most interesting part is that the two can be used together, upscaling the image and also generating extra frames to really push performance.

And last but not least is the new 8th-generation NVIDIA Encoder, or as most people probably know it, NVENC, which now supports AV1 encoding. NVIDIA went and installed two of them on this card and, spoiler alert, it kinda rocks!

While we could probably talk a whole hour about all the changes with this next generation of cards - I am sure you want to see if those huge performance improvements that NVIDIA was boasting about are actually true.

For our tests we are using the new AMD Ryzen 7 7700X CPU, and we homed in on the higher-end cards: the RTX 3080 12GB, the Radeon RX 6900 XT and the new RTX 4090.

So let's jump in, first checking standard rasterized performance and then performance with DLSS turned on at the Quality preset, which loses the least detail.

In the good old Shadow of the Tomb Raider at 1440p, there is an 81% improvement in average FPS over the RTX 3080 and 50% on the 1 percentiles. The RX 6900 XT here is a pretty close representation of where an RTX 3090 would have landed. What is interesting here is the FPS per watt. The Radeon card is pretty efficient at this resolution, but the new RTX 4090 is still 29% more power efficient.

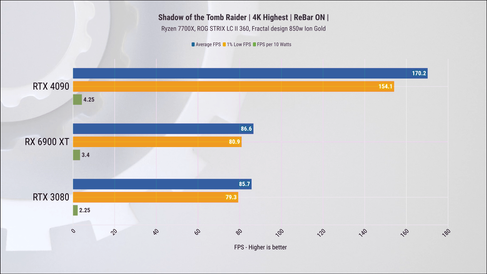
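For readers who want to reproduce these three metrics from their own captures, here is a minimal sketch. It assumes you have a list of per-frame render times in milliseconds and an average board power reading. The function names and the sample numbers are hypothetical, and the exact way the 1 percentile figures in this article were computed is not stated, so the nearest-rank method below is only one common convention.

```python
def summarize_run(frame_times_ms, avg_power_watts):
    """Summarize one benchmark run (hypothetical helper, not the article's tooling)."""
    # Average FPS is total frames divided by total elapsed time.
    total_seconds = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / total_seconds

    # Convert each frame time to an instantaneous FPS and sort ascending,
    # so the slowest frames come first.
    fps_per_frame = sorted(1000.0 / t for t in frame_times_ms)

    # Nearest-rank 1st percentile: roughly 99% of frames are at least this fast.
    index = max(0, len(fps_per_frame) // 100 - 1)
    low_1pct_fps = fps_per_frame[index]

    return {
        "avg_fps": avg_fps,
        "low_1pct_fps": low_1pct_fps,
        "fps_per_watt": avg_fps / avg_power_watts,
    }


def percent_gain(new, old):
    """Relative improvement of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


# Made-up sample: 99 frames at 10 ms and one slow 20 ms frame, at 200 W.
sample = summarize_run([10.0] * 99 + [20.0], avg_power_watts=200.0)
print(sample)
```

Comparing two cards then comes down to calling `percent_gain` on the matching fields of two such summaries.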

Moving up to 4K, both last-gen cards are pretty even, while the RTX 4090 has almost twice the average FPS and about 90% higher 1 percentiles. Compared to 1440p, FPS per watt has halved, which is expected at the higher resolution.

Now with DLSS enabled we gain 37% performance on the RTX 3080 and about 34% on the RTX 4090. The main takeaway is that the new card without DLSS is still 44% faster than the 3080 with DLSS enabled.
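As a quick sanity check, the percentages in this paragraph can be chained to see what native 4K lead they imply. This is a rough sketch using only the rounded figures quoted above; the function name is made up for illustration.

```python
def chain_gains(*gains):
    """Combine relative gains (0.37 means +37%) by multiplying their ratios."""
    ratio = 1.0
    for gain in gains:
        ratio *= 1.0 + gain
    return ratio - 1.0


dlss_gain_3080 = 0.37         # RTX 3080: DLSS on vs. off
dlss_gain_4090 = 0.34         # RTX 4090: DLSS on vs. off
native_4090_vs_dlss_3080 = 0.44  # RTX 4090 native vs. RTX 3080 with DLSS

# 4090 native / 3080 native
#   = (4090 native / 3080 DLSS) * (3080 DLSS / 3080 native)
implied_native_lead = chain_gains(native_4090_vs_dlss_3080, dlss_gain_3080)

# 4090 DLSS / 3080 DLSS
#   = (4090 DLSS / 4090 native) * (4090 native / 3080 DLSS)
implied_dlss_lead = chain_gains(dlss_gain_4090, native_4090_vs_dlss_3080)

print(f"Implied native lead: {implied_native_lead:.0%}")
print(f"Implied DLSS-vs-DLSS lead: {implied_dlss_lead:.0%}")
```

The implied native lead comes out at roughly 97%, which lines up with the "almost twice the average FPS" seen at 4K without DLSS.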

In Horizon Zero Dawn at 1440p the performance difference is not as drastic, with the 4090 leading the pack by 22% on average FPS and 12% on 1 percentiles. This is kind of funny: even in a game with high GPU usage like this one, the RTX 4090 is fast enough to make the CPU the bottleneck. At 4K things change, with a 69% lead on average FPS and 40% on 1 percentiles.

Turning on DLSS, we get another 14% improvement on average FPS and an 8% improvement on 1 percentiles, so it’s not as drastic an improvement as we had in Shadow of the Tomb Raider.

The next game is Fortnite, and at 1440p we have a 47% lead over the RX 6900 XT on average FPS and 41% on the 1 percentiles, which results in a 38% improvement in FPS per watt.

At 4K the lead over the RTX 3080 is 63% on average FPS and 59% on 1 percentiles, with almost double the frames per watt. I was very sceptical about Nvidia claiming they were able to improve both performance and efficiency, as normally you have to trade one for the other. In this example, their statement stands.

Fortnite seems to work really well with DLSS: we get over 50% improvement on average FPS on both cards, and I would even dare to say that with the RTX 4090 and DLSS enabled you could play this game with maxed out settings in a somewhat prosumer way. Not sure I would actually recommend playing competitive FPS in 4K with maxed settings, but, hey, if you can afford this card, the option is there.

All of the previous DLSS results were based on the existing DLSS 2, and this card clearly can output a lot of frames, but that wasn’t the main thing Nvidia was pushing during the presentation. The big one is the new DLSS 3, which actually generates new frames. While only a few titles currently support this technology, now that I have seen its performance I am sure many companies will pick it up.

With the games out of the way, I want to pivot to the area where I think this card will shine the most and where the price is much more justifiable: productivity workloads. We will start with the Blender benchmark. On Nvidia cards we are able to test with either the CUDA or the OptiX engine.

Here in pure CUDA performance we see a 146% improvement in Monster between the RTX 3080 and the 4090. In Junk Shop it's 119% and in the Classroom test it's 136%. OptiX results are slightly lower but still close to double the performance, and in some tests even exceed that. While these cards sit at different price points, that is still a huge difference.

Next we have another Blender test, but this time it is the actual rendering time for the Scanlands demo. Here the new card essentially halves the rendering time of the RTX 3080, and the Radeon card is just left behind.
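The benchmark percentages and the render-time result describe the same thing from two directions, which is easy to mix up. The small sketch below converts between them, assuming the benchmark score scales with rendering throughput (an assumption on my side, the article does not spell out the scoring). Function names are illustrative only.

```python
def speedup_from_percent(improvement_percent):
    """A 146% improvement means 2.46x the throughput of the baseline."""
    return 1.0 + improvement_percent / 100.0


def relative_render_time(improvement_percent):
    """Fraction of the baseline render time, assuming time = work / throughput."""
    return 1.0 / speedup_from_percent(improvement_percent)


# CUDA improvements quoted for the Blender benchmark scenes.
for scene, improvement in [("Monster", 146), ("Junk Shop", 119), ("Classroom", 136)]:
    factor = speedup_from_percent(improvement)
    time_fraction = relative_render_time(improvement)
    print(f"{scene}: {factor:.2f}x throughput, {time_fraction:.0%} of the baseline render time")
```

Read the other way around, halving the render time corresponds to a 100% improvement, so the demo render is in the same ballpark as the benchmark scores.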

During this test the 4090's clock actually went past 2.7 GHz, while the 3080 peaked at 2 GHz, which until recently was considered a really high speed. When it comes to thermals, I was actually rather surprised. The card crept up to the mid-50s °C and stayed there. In part that was because it finished the render quickly enough to start cooling off, but there is another good reason for this. It is actually using slightly less power than the 3080, at times keeping a 20-30 watt difference, so it is certainly efficient. With the fan set to 80% speed the card was audible but did not sound like a jet taking off.
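Lower power draw combined with a shorter render compounds, because the energy used for a job is power multiplied by time. The sketch below illustrates this with made-up numbers: the 320 W draw and the 120 second render are hypothetical placeholders, and only the roughly 25 W gap and the halved render time come from the observations above.

```python
def energy_wh(avg_power_watts, render_seconds):
    """Energy for one render in watt-hours: power (W) * time (h)."""
    return avg_power_watts * render_seconds / 3600.0


# Hypothetical baseline values, NOT measurements from this article.
power_3080_w = 320.0
time_3080_s = 120.0

# From the text: about 20-30 W less power and roughly half the render time.
power_4090_w = power_3080_w - 25.0
time_4090_s = time_3080_s / 2.0

energy_3080 = energy_wh(power_3080_w, time_3080_s)
energy_4090 = energy_wh(power_4090_w, time_4090_s)

print(f"RTX 3080: {energy_3080:.2f} Wh per render")
print(f"RTX 4090: {energy_4090:.2f} Wh per render")
print(f"Energy ratio: {energy_4090 / energy_3080:.2f}")
```

Under these assumptions the new card would need a bit less than half the energy per render, which fits the efficiency picture from the gaming tests.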

Since the V-Ray benchmark does not support Radeon cards, we only have Nvidia in this test. The improvement in performance is very similar to Blender from earlier: we got a 166% improvement in the CUDA score and a 120% improvement in the RTX score.

The next and last test is DaVinci Resolve 18 with H.265 and AV1 encoding. The DaVinci Resolve team has certainly worked closely with Nvidia over the years, integrating a lot of Nvidia AI and machine learning functionality into the software. I am not surprised they are one of the first to leverage the new AV1 encoder. In this test we can clearly see the RTX 4090 flying through the render, especially on the 4K file. And when it comes to AV1, the speeds are still very similar, which is pretty impressive considering it was supposed to be more taxing on the system.

While AV1 encoding for videos is great, it is even more important for streaming, where it can produce higher quality at a lower bitrate compared to H.264, so it will be interesting to see how that goes as it gets more support.

It is clear to me that this new generation from Nvidia has a lot to offer. The big question I have here: is it worth it? Well, the 4090 in my mind is more suited to productivity workloads, especially if users can utilise that sweet sweet new encoder. For gamers, on the other hand, this is super expensive and will be bought by people with much more spare cash than most, but they will love it.

Some of you may wonder why we compared it to the RTX 3080, and there are two reasons for this. First, it's the closest card we have available, and the second reason is actually somewhat painful. We bought the RTX 3080 earlier in the year at the peak of the shortage for approximately 1500 US dollars and now look at it with a sense of regret, but this is actually a great thing. Since there is now plenty of competition in the market, consumers will benefit from all three players duking it out.

Let us know in the comments below what you think about this card and whether you will be buying it.
